Latest recommendations

| Id | Title | Authors | Abstract | Picture | Thematic fields | Recommender | Reviewers | Submission date | |
|---|---|---|---|---|---|---|---|---|---|

02 Sep 2023

Research workflows, paradata, and information visualisation: feedback on an exploratory integration of issues and practices - MEMORIA IS

Dudek Iwona, Blaise Jean-Yves

https://doi.org/10.5281/zenodo.8311129

Using information visualisation to improve traceability, transmissibility and verifiability in research workflows

Recommended by Isto Huvila based on reviews by Adéla Sobotkova and 2 anonymous reviewers

The paper “Research workflows, paradata, and information visualisation: feedback on an exploratory integration of issues and practices - MEMORIA IS” (Dudek & Blaise, 2023) describes a prototype of an information system developed to improve the traceability, transmissibility and verifiability of archaeological research workflows. A key aspect of the work with MEMORIA is to make research documentation and the workflows underpinning the conducted research more approachable and understandable using a series of visual interfaces that allow users of the system to explore archaeological documentation, including metadata describing the data and paradata that describes its underlying processes. The work of Dudek and Blaise addresses one of the central barriers to reproducibility and transparency of research data and proposes a set of both theoretically and practically well-founded tools and methods to solve this major problem. From the reported work on MEMORIA IS, information visualisation and the proposed tools emerge as an interesting and potentially powerful approach for a major push in improving the traceability, transmissibility and verifiability of research data through making research workflows easier to approach and understand. In comparison to technical work relating to archaeological data management, this paper starts commendably with a careful explication of the conceptual and epistemic underpinnings of the MEMORIA IS both in documentation research, knowledge organisation and information visualisation literature.
Rather than being developed on the basis of a set of opaque assumptions, the meticulous description of the MEMORIA IS and its theoretical and technical premises is exemplary in its transparency and richness and has potential for a long-term impact as a part of the body of literature relating to the development of archaeological documentation and documentation tools. While the text is sometimes fairly densely written, it is worth taking the effort to read it through. Another major strength of the paper is that it provides a rich set of examples of the workings of the prototype system that makes it possible to develop a comprehensive understanding of the proposed approaches and assess their validity. As a whole, this paper and the reported work on MEMORIA IS form a worthy addition to the literature on and practical work for developing critical infrastructures for data documentation, management and access in archaeology. Beyond archaeology and the specific context of the discussed work, this paper has obvious relevance to comparable work in other fields.

References

Dudek, I. and Blaise, J.-Y. (2023) Research workflows, paradata, and information visualisation: feedback on an exploratory integration of issues and practices - MEMORIA IS, Zenodo, 8252923, ver. 3 peer-reviewed and recommended by Peer Community in Archaeology. https://doi.org/10.5281/zenodo.8252923

| Research workflows, paradata, and information visualisation: feedback on an exploratory integration of issues and practices - MEMORIA IS | Dudek Iwona, Blaise Jean-Yves | <p>The paper presents an exploratory web information system developed as a reaction to practical and epistemological questions, in the context of a scientific unit studying the architectural heritage (from both historical sciences perspective, and... |  | Computational archaeology | Isto Huvila | 2023-05-02 12:50:39 | View | |

01 Sep 2023

Zooarchaeological investigation of the Hoabinhian exploitation of reptiles and amphibians in Thailand and Cambodia with a focus on the Yellow-headed tortoise (Indotestudo elongata (Blyth, 1854))

Corentin Bochaton, Sirikanya Chantasri, Melada Maneechote, Julien Claude, Christophe Griggo, Wilailuck Naksri, Hubert Forestier, Heng Sophady, Prasit Auertrakulvit, Jutinach Bowonsachoti, Valery Zeitoun

https://doi.org/10.1101/2023.04.27.538552

A zooarchaeological perspective on testudine bones from Hoabinhian hunter-gatherer archaeological assemblages in Southeast Asia

Recommended by Ruth Blasco based on reviews by Noel Amano and Iratxe Boneta

The study of the evolution of the human diet has been a central theme in numerous archaeological and paleoanthropological investigations. By reconstructing diets, researchers gain deeper insights into how humans adapted to their environments. The analysis of animal bones plays a crucial role in extracting dietary information. Most studies involving ancient diets rely heavily on zooarchaeological examinations, which, due to their extensive history, have amassed a wealth of data. During the Pleistocene–Holocene periods, testudine bones have been commonly found in a multitude of sites. The use of turtles and tortoises as food sources appears to stretch back to the Early Pleistocene [1-4]. More importantly, these small animals play a more significant role within a broader debate. The exploitation of tortoises in the Mediterranean Basin has been examined through the lens of optimal foraging theory and diet breadth models (e.g. [5-10]). According to the diet breadth model, resources are incorporated into diets based on their ranking and influenced by factors such as net return, which in turn depends on caloric value and search/handling costs [11]. Within these theoretical frameworks, tortoises hold a significant position. Their small size and sluggish movement require minimal effort and relatively simple technology for procurement and processing. This aligns with optimal foraging models in which the low handling costs of slow-moving prey compensate for their small size [5-6,9]. Tortoises also offer distinct advantages. They can be easily transported and kept alive, thereby maintaining freshness for deferred consumption [12-14]. For example, historical accounts suggest that Mexican traders recognised tortoises as portable and storable sources of protein and water [15]. Furthermore, tortoises provide non-edible resources, such as shells, which can serve as containers. 
This possibility has been discussed in the context of Kebara Cave [16] and noted in ethnographic and historical records (e.g. [12]). However, despite these advantages, their slow growth rate might have rendered intensive long-term predation unsustainable. While tortoises are well-documented in the Southeast Asian archaeological record, zooarchaeological analyses in this region have been limited, particularly concerning prehistoric hunter-gatherer populations that may have relied extensively on inland chelonian taxa. With the present paper, Bochaton et al. [17] aim to bridge this gap by conducting an exhaustive zooarchaeological analysis of turtle bone specimens from four Hoabinhian hunter-gatherer archaeological assemblages in Thailand and Cambodia. These assemblages span from the Late Pleistocene to the first half of the Holocene. The authors focus on bones attributed to the yellow-headed tortoise (Indotestudo elongata), which is the most prevalent taxon in the assemblages. The research includes osteometric equations to estimate carapace size and explore population structures across various sites. The objective is to uncover human tortoise exploitation strategies in the region, and the results reveal consistent subsistence behaviours across diverse locations, even amidst varying environmental conditions. These final proposals suggest the possibility of cultural similarities across different periods and regions in continental Southeast Asia. In summary, this paper [17] represents a significant advancement in the realm of zooarchaeological investigations of small prey within prehistoric communities in the region. While certain approaches and issues may require further refinement, they serve as a comprehensive and commendable foundation for assessing human hunting adaptations.

References [1] Hartman, G., 2004. Long-term continuity of a freshwater turtle (Mauremys caspica rivulata) population in the northern Jordan Valley and its paleoenvironmental implications. In: Goren-Inbar, N., Speth, J.D. (Eds.), Human Paleoecology in the Levantine Corridor. Oxbow Books, Oxford, pp. 61-74. https://doi.org/10.2307/j.ctvh1dtct.11 [2] Alperson-Afil, N., Sharon, G., Kislev, M., Melamed, Y., Zohar, I., Ashkenazi, R., Biton, R., Werker, E., Hartman, G., Feibel, C., Goren-Inbar, N., 2009. Spatial organization of hominin activities at Gesher Benot Ya'aqov, Israel. Science 326, 1677-1680. https://doi.org/10.1126/science.1180695 [3] Archer, W., Braun, D.R., Harris, J.W., McCoy, J.T., Richmond, B.G., 2014. Early Pleistocene aquatic resource use in the Turkana Basin. J. Hum. Evol. 77, 74-87. https://doi.org/10.1016/j.jhevol.2014.02.012 [4] Blasco, R., Blain, H.A., Rosell, J., Carlos, D.J., Huguet, R., Rodríguez, J., Arsuaga, J.L., Bermúdez de Castro, J.M., Carbonell, E., 2011. Earliest evidence for human consumption of tortoises in the European Early Pleistocene from Sima del Elefante, Sierra de Atapuerca, Spain. J. Hum. Evol. 11, 265-282. https://doi.org/10.1016/j.jhevol.2011.06.002 [5] Stiner, M.C., Munro, N., Surovell, T.A., Tchernov, E., Bar-Yosef, O., 1999. Palaeolithic growth pulses evidenced by small animal exploitation. Science 283, 190-194. https://doi.org/10.1126/science.283.5399.190 [6] Stiner, M.C., Munro, N.D., Surovell, T.A., 2000. The tortoise and the hare: small-game use, the Broad-Spectrum Revolution, and paleolithic demography. Curr. Anthropol. 41, 39-73. https://doi.org/10.1086/300102 [7] Stiner, M.C., 2001. Thirty years on the “Broad Spectrum Revolution” and paleolithic demography. Proc. Natl. Acad. Sci. U. S. A. 98 (13), 6993-6996. https://doi.org/10.1073/pnas.121176198 [8] Stiner, M.C., 2005. The Faunas of Hayonim Cave (Israel): a 200,000-Year Record of Paleolithic Diet. Demography and Society. 
American School of Prehistoric Research, Bulletin 48. Peabody Museum Press, Harvard University, Cambridge. [9] Stiner, M.C., Munro, N.D., 2002. Approaches to prehistoric diet breadth, demography, and prey ranking systems in time and space. J. Archaeol. Method Theory 9, 181-214. https://doi.org/10.1023/A:1016530308865 [10] Blasco, R., Cochard, D., Colonese, A.C., Laroulandie, V., Meier, J., Morin, E., Rufà, A., Tassoni, L., Thompson, J.C. 2022. Small animal use by Neanderthals. In Romagnoli, F., Rivals, F., Benazzi, S. (eds.), Updating Neanderthals: Understanding Behavioral Complexity in the Late Middle Palaeolithic. Elsevier Academic Press, pp. 123-143. ISBN 978-0-12-821428-2. https://doi.org/10.1016/C2019-0-03240-2 [11] Winterhalder, B., Smith, E.A., 2000. Analyzing adaptive strategies: human behavioural ecology at twenty-five. Evol. Anthropol. 9, 51-72. https://doi.org/10.1002/(sici)1520-6505(2000)9:2%3C51::aid-evan1%3E3.0.co;2-7 [12] Schneider, J.S., Everson, G.D., 1989. The Desert Tortoise (Xerobates agassizii) in the Prehistory of the Southwestern Great Basin and Adjacent areas. J. Calif. Gt. Basin Anthropol. 11, 175-202. http://www.jstor.org/stable/27825383 [13] Thompson, J.C., Henshilwood, C.S., 2014b. Nutritional values of tortoises relative to ungulates from the Middle Stone Age levels at Blombos Cave, South Africa: implications for foraging and social behaviour. J. Hum. Evol. 67, 33-47. https://doi.org/10.1016/j.jhevol.2013.09.010 [14] Blasco, R., Rosell, J., Smith, K.T., Maul, L.Ch., Sañudo, P., Barkai, R., Gopher, A. 2016. Tortoises as a Dietary Supplement: a view from the Middle Pleistocene site of Qesem Cave, Israel. Quat Sci Rev 133, 165-182. https://doi.org/10.1016/j.quascirev.2015.12.006 [15] Pepper, C., 1963. The truth about the tortoise. Desert Mag. 26, 10-11. [16] Speth, J.D., Tchernov, E., 2002. Middle Paleolithic tortoise use at Kebara Cave (Israel). J. Archaeol. Sci. 29, 471-483. 
https://doi.org/10.1006/jasc.2001.0740 [17] Bochaton, C., Chantasri, S., Maneechote, M., Claude, J., Griggo, C., Naksri, W., Forestier, H., Sophady, H., Auertrakulvit, P., Bowonsachoti, J. and Zeitoun, V. (2023) Zooarchaeological investigation of the Hoabinhian exploitation of reptiles and amphibians in Thailand and Cambodia with a focus on the Yellow-headed Tortoise (Indotestudo elongata (Blyth, 1854)), BioRXiv, 2023.04.27.538552, ver. 3 peer-reviewed and recommended by Peer Community in Archaeology. https://doi.org/10.1101/2023.04.27.538552v3

| Zooarchaeological investigation of the Hoabinhian exploitation of reptiles and amphibians in Thailand and Cambodia with a focus on the Yellow-headed tortoise (*Indotestudo elongata* (Blyth, 1854)) | Corentin Bochaton, Sirikanya Chantasri, Melada Maneechote, Julien Claude, Christophe Griggo, Wilailuck Naksri, Hubert Forestier, Heng Sophady, Prasit Auertrakulvit, Jutinach Bowonsachoti, Valery Zeitoun | <p style="text-align: justify;">While non-marine turtles are almost ubiquitous in the archaeological record of Southeast Asia, their zooarchaeological examination has been inadequately pursued within this tropical region. This gap in research hind... |  | Asia, Taphonomy, Zooarchaeology | Ruth Blasco | Iratxe Boneta, Noel Amano | 2023-05-02 09:30:50 | View |

25 Jul 2023

Sorghum and finger millet cultivation during the Aksumite period: insights from ethnoarchaeological modelling and microbotanical analysis

Abel Ruiz-Giralt, Alemseged Beldados, Stefano Biagetti, Francesca D’Agostini, A. Catherine D’Andrea, Yemane Meresa, Carla Lancelotti

https://doi.org/10.5281/zenodo.7859673

An innovative integration of ethnoarchaeological models with phytolith data to study histories of C4 crop cultivation

Recommended by Emma Loftus based on reviews by Tanya Hattingh and 1 anonymous reviewer

This article “Sorghum and finger millet cultivation during the Aksumite period: insights from ethnoarchaeological modelling and microbotanical analysis”, submitted by Ruiz-Giralt and colleagues (2023a), presents an innovative attempt to address the lack of palaeobotanical data concerning ancient agricultural strategies in the northern Horn of Africa. In lieu of well-preserved macrobotanical remains, an especial problem for C4 crop species, these authors leverage microbotanical remains (phytoliths), in combination with ethnoarchaeologically-informed agroecology models to investigate finger millet and sorghum cultivation during the period of the Aksumite Kingdom (c. 50 BCE – 800 CE). Both finger millet and sorghum have played important roles in the subsistence of the Horn region, and throughout much of the rest of Africa and the world in the past. The importance of these drought-resistant and adaptable crops is likely to increase as we move into a warmer, drier world. Yet their histories of cultivation are still only approximately sketched due to a paucity of well-preserved remains from archaeological sites - for example, debate continues as to the precise centre of their domestication. Recent studies of phytoliths (by these and other authors) are demonstrating the likely continuous presence of these crops from the pre-Aksumite period. However, phytoliths are diagnostic only to broad taxonomic levels, and cannot be used to securely identify species.
To supplement these observations, Ruiz-Giralt et al. deploy models (previously developed by this team: Ruiz-Giralt et al., 2023b) that incorporate environmental variables and ethnographic data on traditional agrosystems. They evaluate the feasibility of different agricultural regimes around the locations of numerous archaeological sites distributed across the highlands of northern Ethiopia and southern Eritrea. Their results indicate the general viability of finger millet and sorghum cultivation around archaeological settlements in the past, with various regions displaying greater-or-lesser suitability at different distances from the site itself. The models also highlight the likelihood of farmers utilising extensive-rainfed regimes, given low water and soil nutrient requirements for these crops. The authors discuss the results with respect to data on phytolith assemblages, particularly at the site of Ona Adi. They conclude that Aksumite agriculture very likely included the cultivation of finger millet and sorghum, as part of a broader system of rainfed cereal cultivation. Ruiz-Giralt et al. argue, and have demonstrated, that ethnoarchaeologically-informed models can be used to generate hypotheses to be evaluated against archaeological data. The integration of many diverse lines of information in this paper certainly enriches the discussion of agricultural possibilities in the past, and the use of a modelling framework helps to formalise the available hypotheses. However, they emphasise that modelling approaches cannot be pursued in lieu of rigorous archaeobotanical studies but only in tandem - a greater commitment to archaeobotanical sampling is required in the region if we are to fully detail the histories of these important crops. References Ruiz-Giralt, A., Beldados, A., Biagetti, S., D’Agostini, F., D’Andrea, A. C., Meresa, Y. and Lancelotti, C. (2023a). 
Sorghum and finger millet cultivation during the Aksumite period: insights from ethnoarchaeological modelling and microbotanical analysis. Zenodo, 7859673, ver. 3 peer-reviewed and recommended by Peer Community in Archaeology. https://doi.org/10.5281/zenodo.7859673 Ruiz-Giralt, A., Biagetti, S., Madella, M. and Lancelotti, C. (2023b). Small-scale farming in drylands: New models for resilient practices of millet and sorghum cultivation. PLoS ONE 18, e0268120. https://doi.org/10.1371/journal.pone.0268120

| Sorghum and finger millet cultivation during the Aksumite period: insights from ethnoarchaeological modelling and microbotanical analysis | Abel Ruiz-Giralt, Alemseged Beldados, Stefano Biagetti, Francesca D’Agostini, A. Catherine D’Andrea, Yemane Meresa, Carla Lancelotti | <p>For centuries, finger millet (<em>Eleusine coracana</em> Gaertn.) and sorghum (<em>Sorghum bicolor</em> (L.) Moench) have been two of the most economically important staple crops in the northern Horn of Africa. Nonetheless, their agricultural h... | Africa, Archaeobotany, Computational archaeology, Protohistory, Spatial analysis | Emma Loftus | 2023-04-29 16:24:54 | View | ||

Today

Exploiting RFID Technology and Robotics in the Museum

Antonis G. Dimitriou, Stella Papadopoulou, Maria Dermenoudi, Angeliki Moneda, Vasiliki Drakaki, Andreana Malama, Alexandros Filotheou, Aristidis Raptopoulos Chatzistefanou, Anastasios Tzitzis, Spyros Megalou, Stavroula Siachalou, Aggelos Bletsas, Traianos Yioultsis, Anna Maria Velentza, Sofia Pliasa, Nikolaos Fachantidis, Evangelia Tsangaraki, Dimitrios Karolidis, Charalampos Tsoungaris, Panagiota Balafa and Angeliki Koukouvou

https://doi.org/10.5281/zenodo.7805387

Social Robotics in the Museum: a case for human-robot interaction using RFID Technology

Recommended by Daniel Carvalho based on reviews by Dominik Hagmann, Sebastian Hageneuer and Alexis Pantos

The paper “Exploiting RFID Technology and Robotics in the Museum” (Dimitriou et al 2023) is a relevant contribution to museology and an interface between the public, archaeological discourse and the field of social robotics. It deals well with these themes and is concise in its approach, with a strong visual component that helps the reader to understand what is at stake. The option of demonstrating the different steps that lead to the final construction of the robot is appropriate, so that it is understood that it really is a linked process and not simple tasks that have no connection. The use of RFID technology for topological movement of social robots has been continuously developed (e.g., Corrales and Salichs 2009; Turcu and Turcu 2012; Sequeira and Gameiro 2017) and shown to have advantages for these environments. Especially in the context of a museum, with all the necessary precautions to avoid breaching the public's privacy, RFID labels are a viable, low-cost solution, as the authors point out (Dimitriou et al 2023), and, above all, one that does not require the identification of users.
It is in itself part of an ambitious project, since the robot performs several functions and not just one, a development compared to other currents within social robotics (see Hellou et al 2022: 1770 for a description of the tasks given to robots in museums). The robotic system itself also makes effective use of the localization system, both physically, by RFID labels and by knowing how to situate itself with the public visiting the museum, adapting to their needs, which is essential for it to be successful (see Gasteiger, Hellou and Ahn 2022: 690 for the theme of localization). Archaeology can provide a threshold of approaches when it comes to social robotics and this project demonstrates that, bringing together elements of interaction, education and mobility in a single method. Hence, this is a paper with great merit and deserves to be recommended as it allows us to think of the museum as a space where humans and non-humans can converge to create intelligible discourses, whether in the historical, archaeological or cultural spheres. References Dimitriou, A. G., Papadopoulou, S., Dermenoudi, M., Moneda, A., Drakaki, V., Malama, A., Filotheou, A., Raptopoulos Chatzistefanou, A., Tzitzis, A., Megalou, S., Siachalou, S., Bletsas, A., Yioultsis, T., Velentza, A. M., Pliasa, S., Fachantidis, N., Tsagkaraki, E., Karolidis, D., Tsoungaris, C., Balafa, P. and Koukouvou, A. (2024). Exploiting RFID Technology and Robotics in the Museum. Zenodo, 7805387, ver. 3 peer-reviewed and recommended by Peer Community in Archaeology. https://doi.org/10.5281/zenodo.7805387 Corrales, A. and Salichs, M.A. (2009). Integration of a RFID System in a Social Robot. In: Kim, JH., et al. Progress in Robotics. FIRA 2009. Communications in Computer and Information Science, vol 44. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-03986-7_8 Gasteiger, N., Hellou, M. and Ahn, H.S. (2023). 
Factors for Personalization and Localization to Optimize Human–Robot Interaction: A Literature Review. Int J of Soc Robotics 15, 689–701. https://doi.org/10.1007/s12369-021-00811-8 Hellou, M., Lim, J., Gasteiger, N., Jang, M. and Ahn, H. (2022). Technical Methods for Social Robots in Museum Settings: An Overview of the Literature. Int J of Soc Robotics 14, 1767–1786 (2022). https://doi.org/10.1007/s12369-022-00904-y Sequeira, J. S., and Gameiro, D. (2017). A Probabilistic Approach to RFID-Based Localization for Human-Robot Interaction in Social Robotics. Electronics, 6(2), 32. MDPI AG. http://dx.doi.org/10.3390/electronics6020032 Turcu, C. and Turcu, C. (2012). The Social Internet of Things and the RFID-based robots. In: IV International Congress on Ultra Modern Telecommunications and Control Systems, St. Petersburg, Russia, 2012, pp. 77-83. https://doi.org/10.1109/ICUMT.2012.6459769 | Exploiting RFID Technology and Robotics in the Museum | Antonis G. Dimitriou, Stella Papadopoulou, Maria Dermenoudi, Angeliki Moneda, Vasiliki Drakaki, Andreana Malama, Alexandros Filotheou, Aristidis Raptopoulos Chatzistefanou, Anastasios Tzitzis, Spyros Megalou, Stavroula Siachalou, Aggelos Bletsas, ... | <p>This paper summarizes the adoption of new technologies in the Archaeological Museum of Thessaloniki, Greece. RFID technology has been adopted. RFID tags have been attached to the artifacts. This allows for several interactions, including tracki... |  | Conservation/Museum studies, Remote sensing | Daniel Carvalho | 2023-04-10 14:04:23 | View | |

04 Oct 2023

IUENNA – openIng the soUthErn jauNtal as a micro-regioN for future Archaeology: A "para-description"

Hagmann, Dominik; Reiner, Franziska

https://doi.org/10.31219/osf.io/5vwg8

The IUENNA project: integrating old data and documentation for future archaeology

Recommended by Ronald Visser based on reviews by Nina Richards and 3 anonymous reviewers

This recommended paper on the IUENNA project (Hagmann and Reiner 2023) is not a paper in the traditional sense, but it is a reworked version of a project proposal. It is refreshing to read about a project that has just started and see what the aims of the project are. This ties in with several open science ideas and standards (e.g. Brinkman et al. 2023). I am looking forward to seeing in a few years how the authors managed to reach the aims and goals of the project. The IUENNA project deals with the legacy data and old excavations on the Hemmaberg and in the Jauntal. Archaeological research in this small but important region has taken place for more than a century, revealing material from over 2000 years of human history. The Hemmaberg is well known for its late antique and early medieval structures, such as roads, villas and the various churches. The wider Jauntal reveals archaeological finds and features dating from the Iron Age to the recent past. The authors of the paper show the need to make sure that the documentation and data of these past archaeological studies and projects will be accessible in the future, or in their own words: "Acute action is needed to systematically transition these datasets from physical filing cabinets to a sustainable, networked virtual environment for long-term use" (Hagmann and Reiner 2023: 5). The paper clearly shows how this initiative fits within larger developments in both Digital Archaeology and the Digital Humanities. In addition, the project is well grounded within Austrian archaeology. While the project ties in with various international standards and initiatives, such as Ariadne (https://ariadne-infrastructure.eu/) and FAIR-data standards (Wilkinson et al. 2016, 2019), it would benefit from the long experience that institutes such as the ADS (https://archaeologydataservice.ac.uk/) and DANS (see Data Station Archaeology: https://dans.knaw.nl/en/data-stations/archaeology/) have with the storage of archaeological data.
I would also suggest having a look at the Dutch SIKB0102 standard (https://www.sikb.nl/datastandaarden/richtlijnen/sikb0102) for the exchange of archaeological data. The documentation is all in Dutch, but we wrote an English paper a few years back that explains the various concepts (Boasson and Visser 2017). However, these are minor details or improvements compared to what the authors show in their project proposal. The integration of many standards in the project and the use of open software in a well-defined process is recommendable. The IUENNA project is an ambitious project, which will hopefully lead to better insights, guidelines and workflows on dealing with legacy data or documentation. These lessons will hopefully benefit archaeology as a discipline. This is important, because various (European) countries are dealing with similar problems, since many excavations of the past have never been properly published, digitalized or deposited. In the Netherlands, for example, various projects dealt with publication of legacy excavations in the Odyssee-project (https://www.nwo.nl/onderzoeksprogrammas/odyssee). This has led to the publication of various books and datasets (24) (https://easy.dans.knaw.nl/ui/datasets/id/easy-dataset:34359), but there are still many datasets (8) missing from the various projects. In addition, each project followed their own standards in creating digital data, while IUENNA will make an effort to standardize this. More than 1000 Dutch legacy excavations are still waiting to be published and made into a modern dataset (Kleijne 2010) and this is probably the case in many other countries. I sincerely hope that a successful end of IUENNA will be an inspiration for other regions and countries for future safekeeping of legacy data.

References

Boasson, W and Visser, RM. 2017 SIKB0102: Synchronizing Excavation Data for Preservation and Re-Use. Studies in Digital Heritage 1(2): 206–224.
https://doi.org/10.14434/sdh.v1i2.23262 Brinkman, L, Dijk, E, Jonge, H de, Loorbach, N and Rutten, D. 2023 Open Science: A Practical Guide for Early-Career Researchers https://doi.org/10.5281/zenodo.7716153 Hagmann, D and Reiner, F. 2023 IUENNA – openIng the soUthErn jauNtal as a micro-regioN for future Archaeology: A ‘para-description’. https://doi.org/10.31219/osf.io/5vwg8 Kleijne, JP. 2010. Odysee in de breedte. Verslag van het NWO Odyssee programma, kortlopend onderzoek: ‘Odyssee, een oplossing in de breedte: de 1000 onuitgewerkte sites, die tot een substantiële kennisvermeerdering kunnen leiden, digitaal beschikbaar!’ ‐ ODYK‐09‐13. Den Haag: E‐depot Nederlandse Archeologie (EDNA). https://doi.org/10.17026/dans-z25-g4jw Wilkinson, MD, Dumontier, M, Aalbersberg, IjJ, Appleton, G, Axton, M, Baak, A, Blomberg, N, Boiten, J-W, da Silva Santos, LB, Bourne, PE, Bouwman, J, Brookes, AJ, Clark, T, Crosas, M, Dillo, I, Dumon, O, Edmunds, S, Evelo, CT, Finkers, R, Gonzalez-Beltran, A, Gray, AJG, Groth, P, Goble, C, Grethe, JS, Heringa, J, ’t Hoen, PAC, Hooft, R, Kuhn, T, Kok, R, Kok, J, Lusher, SJ, Martone, ME, Mons, A, Packer, AL, Persson, B, Rocca-Serra, P, Roos, M, van Schaik, R, Sansone, S-A, Schultes, E, Sengstag, T, Slater, T, Strawn, G, Swertz, MA, Thompson, M, van der Lei, J, van Mulligen, E, Velterop, J, Waagmeester, A, Wittenburg, P, Wolstencroft, K, Zhao, J and Mons, B. 2016 The FAIR Guiding Principles for scientific data management and stewardship. Scientific Data 3(1): 160018. 
https://doi.org/10.1038/sdata.2016.18 Wilkinson, MD, Dumontier, M, Jan Aalbersberg, I, Appleton, G, Axton, M, Baak, A, Blomberg, N, Boiten, J-W, da Silva Santos, LB, Bourne, PE, Bouwman, J, Brookes, AJ, Clark, T, Crosas, M, Dillo, I, Dumon, O, Edmunds, S, Evelo, CT, Finkers, R, Gonzalez-Beltran, A, Gray, AJG, Groth, P, Goble, C, Grethe, JS, Heringa, J, Hoen, PAC ’t, Hooft, R, Kuhn, T, Kok, R, Kok, J, Lusher, SJ, Martone, ME, Mons, A, Packer, AL, Persson, B, Rocca-Serra, P, Roos, M, van Schaik, R, Sansone, S-A, Schultes, E, Sengstag, T, Slater, T, Strawn, G, Swertz, MA, Thompson, M, van der Lei, J, van Mulligen, E, Jan Velterop, Waagmeester, A, Wittenburg, P, Wolstencroft, K, Zhao, J and Mons, B. 2019 Addendum: The FAIR Guiding Principles for scientific data management and stewardship. Scientific Data 6(1): 6. https://doi.org/10.1038/s41597-019-0009-6

| IUENNA – openIng the soUthErn jauNtal as a micro-regioN for future Archaeology: A "para-description" | Hagmann, Dominik; Reiner, Franziska | <p>The Go!Digital 3.0 project IUENNA – an acronym for “openIng the soUthErn jauNtal as a micro-regioN for future Archaeology” – embraces a comprehensive open science methodology. It focuses on the archaeological micro-region of the Jauntal Valley ... |  | Antiquity, Classic, Computational archaeology | Ronald Visser | 2023-04-06 13:36:16 | View | |

05 Jan 2024

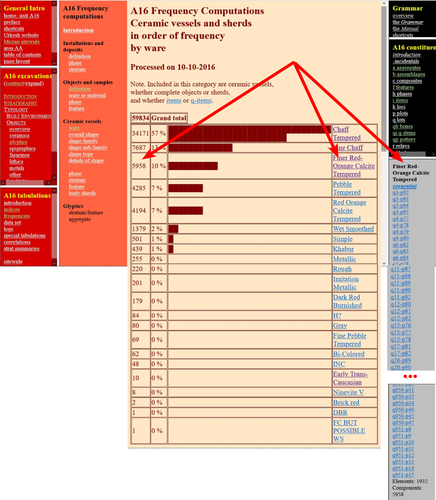

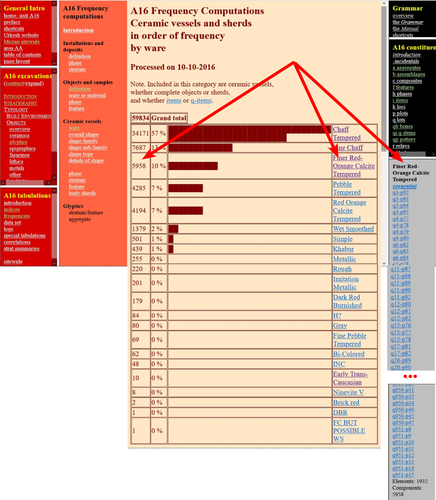

The Density of Types and the Dignity of the Fragment. A Website Approach to Archaeological Typology.

Giorgio Buccellati and Marilyn Kelly-Buccellati

https://doi.org/10.5281/zenodo.7743834

Roster and Lexicon – A Radical Digital-Dialogical Approach to Questions of Typology and Categorization in Archaeology

Recommended by Shumon Tobias Hussain, Felix Riede and Sébastien Plutniak based on reviews by Dominik Hagmann and 2 anonymous reviewers

“The density of types and the dignity of the fragment. A website approach to archaeological typology” by G. Buccellati and M. Kelly-Buccellati (1) is a contribution to the rapidly growing literature on digital approaches to archaeological data management, expertly showcasing the significant theoretical and epistemological impetus of such work. The authors offer a conceptually lucid discussion of key concepts in archaeological ordering practices surrounding the longstanding tension between so-called ‘etic’ and ‘emic’ approaches, thereby providing a thorough systematic of how to think through sameness and difference in the context of voluminous digital archaeological data. As a point of departure, the authors reconsider the relationship between archaeological fragments – spatiotemporally bounded artefacts and features – and their larger meaning-giving totality as the primary locus of archaeological knowledge. Typology can then be said to serve this overriding quest to resolve the conflict between parts and wholes, as the parts themselves are never sufficient to render the whole but the whole remains elusive without reference to the parts. Buccellati and Kelly-Buccellati here make an interesting point about the importance to register the globality of the archaeological record – that is, literally everything encountered in the soil – without making any prior choices as to what supposedly matters and what not. The distinctiveness of the archaeological enterprise, according to them, indeed consists of the circumstance that merely disconnected fragments come to the attention of archaeologists and the only objective data that can be attained, because of this, are about the situated location of fragments in the ground and their relation to other fragments – what they call ‘emplacement’. This, we would add, includes the relationship of fragments with human observers and the employed methods of excavation as observation. 
As the authors say: “[i]t is in this sense that the fragments are natively digital: they are atoms that do not cohere into a typological whole”. The systematic exploration of how the fragments so recovered may be re-articulated is then essentially the goal of archaeological categorization and typology, but these practices can only ever be successful if the whole context of original ‘emplacement’ is carefully taken into consideration. This reconstruction of the fundamental epistemological situation archaeology finds itself in leads the authors to a general rejection of ‘more’ vs. ‘less’ objective or even subjective ordering practices, as such qualifications tend to miss the point. What matters is to enable the flexible and scalable confrontation of isolated archaeological fragments, to experiment with and test different part-whole relations and their possible knowledge contributions. It is no coincidence that the authors insist on a dynamic approach to ordering practices and type-thinking in archaeology here, which in many ways often comes very close to the general conceptual orientation that the philosopher Stephen C. Pepper (2) has called ‘organicism’ – a preoccupation with resolving the tension between heterogeneous fragments and coherent wholes without losing sight of the specificity of each single fragment. In the view of organicist thinkers, and the authors seem to share this recognition, to take complexity seriously means to centre the dialectics between fragments and wholes in their entirety. This notion is directly reflected in the authors’ interesting definition of ‘big data’ in archaeology as a multi-layered and multi-referential system of organizing the totality of observations of emplacement (the Global record). Based on this broader exposition, Buccellati and Kelly-Buccellati make some perceptive and noteworthy observations vis-à-vis the aforementioned emic-etic distinction that has caused so much archaeological confusion and debate (3–6). 
To begin with, emic and etic designate different systemic logics of organizing observable sameness and difference. Emic systems are closed and foreground the idea of the roster; they recognize only a limited set of types whose identity depends on relative differences. Etic systems, on the other hand, are in principle open (and even open-ended) and rely on the notion of the lexicon; they enlist a principally endless repertoire of traits, types and sub-types (classes and sub-classes may be added to this list of course). Difference in etic systems is moreover defined according to some general standards that appear to eclipse the standards of the system itself. Etic systems therefore tend to advocate supposedly universal principles of how to establish similarity vs. difference, although, in reality, there is substantial debate as to what these principles may be or whether such an endeavour is a useful undertaking. In the wild, both etic and emic systems of ordering and categorization are of course encountered in the plural, but etic systems deploy external standards of order while emic systems operate via internal standards. An interesting observation by the authors in this context is that archaeological reasoning in relation to sameness and difference is almost never either exclusively etic or exclusively emic. The simple reason is that any grouping of fragments according to technological (means/modes of production) or functional considerations (use-wear, tool design, relation between form and function) based on empirical evidence is typically already infused by emic standards. The classic example from the analysis of archaeological pottery is ware groups, which reference the nexus of technological know-how and concrete practices, and which rely, in a given context, on internal, relative differentiations between the respective observed practices. Yet ignoring these distinctions would sideline significant knowledge on the past. 
These discussions are refreshing as they may indicate that ordering practices – when considered as an end in themselves – misconstrue the archaeological process as static and so advocate for categories, classes, and types to be carved out before any serious analysis can begin. It could in fact be argued that in doing so, they merely construct a new closed system, then emic by definition. Buccellati and Kelly-Buccellati propose an alternative without discarding the intuition that ordering archaeological materials is conditional to the inferential and knowledge-production process: they propose that typologies should be treated as arguments. Moreover, the sort of argument they have in mind is to a lesser extent ‘formal-logical’ but instead emphatically ‘dialogical’ in nature, as such argumentative form helps to combat the inherent static-ness of ordering practices the authors criticize, and so discloses a radically dynamic approach to the undertaking of fragment-whole matching. The organicist inclination to preserve ‘the dignity of fragments’ while working towards their resolution in attendant wholes and sub-wholes further gives rise to the idea that such ‘native digital fragments’ must be brought into systematic conversation with one another, acknowledging the involved complexity. To this end, the authors frame ordering work and typo-praxis as a ‘digital discourse’ and ask what the conditions and possibilities for such discourse are and how it can be facilitated. It is here that they put forward the idea that the webpage may provide an ideal epistemic model system to promote the preservation of emplaced archaeological fragments while simultaneously promoting multistranded and multi-context explorations of fragment coherence and articulation. The website enables unique forms of exploration and engagement with data and new arguments escaping the fixity of the analogue-printed which dominates current archaeological practice. 
Similar experiences were made, for example, in the context of Gardin’s ‘logicism’, leading to broadly comparable attempts to overcome the analogue with more dynamic, HTML/web-based forms of data presentation, exploration and discussion (7, 8). As such, Buccellati and Kelly-Buccellati table a range of fresh arguments for re-thinking typology beyond and with text at the same time, to enable ‘dynamic reading’ of fragment-whole relationships in an increasingly digital world. Their proposal thereby comes close to what has been termed ‘deep mapping’ in the context of critical cartographies and other spatially-inclined scholarship in the Anglophone world (9, 10). Deep maps seek to transcend the epistemological limitations of 2D-representations of spatiality on traditional maps and introduce different layers of informational depth and heterogeneity, which, similarly to the living digital webpage proposed by the authors, can be continuously extended and revised and which may also greatly promote multidisciplinary and team-based research endeavours. In the same spirit as the authors’ ‘digital discourse’, deep mapping draws attention to the knowledge potential of bringing together the heterogeneous, the etic and the emic, and of paying more attention to ‘multiplanar’ and ‘multilinear’ relationships as well as the associated relations of relations. This proposal to deploy types and typology in general as dynamic arguments is linked to the ambition to contribute to and work on the narrativization of the archaeological record without tacit (and often unconscious) conceptual pre-subscription, countering typologies that remain largely in the abstract and so have contributed to the creeping anonymity of the past.

Bibliography 1. Buccellati, G. and Kelly-Buccellati, M. (2023). The Density of Types and the Dignity of the Fragment. A website approach to archaeological typology, Zenodo, 7743834, ver. 4 peer-reviewed and recommended by Peer Community in Archaeology. https://doi.org/10.5281/zenodo.7743834 2. Pepper, S. C. (1972). World hypotheses: a study in evidence, 7. print (Univ. of California Press). 3. Hayden, B. (1984). Are Emic Types Relevant to Archaeology? Ethnohistory 31, 79–92. https://doi.org/10.2307/482057 4. Tostevin, G. B. (2011). An Introduction to the Special Issue: Reduction Sequence, Chaîne Opératoire, and Other Methods: The Epistemologies of Different Approaches to Lithic Analysis. PaleoAnthropology, 293–296. https://www.doi.org/10.4207/PA.2011.ART59 5. Tostevin, G. B. (2013). Seeing lithics: a middle-range theory for testing for cultural transmission in the Pleistocene (Oakville, CT: Oxbow Books). 6. Boissinot, P. (2015). Qu’est-ce qu’un fait archéologique? (Éditions EHESS). https://doi.org/10.4000/lectures.19921 7. Gardin, J.-C. and Roux, V. (2004). The Arkeotek Project: a European Network of Knowledge Bases in the Archaeology of Techniques. Archeologia e Calcolatori 15, 25–40. 8. Husi, P. (2022). La céramique médiévale et moderne du bassin de la Loire moyenne, chrono-typologie et transformation des aires culturelles dans la longue durée (6e–17e s.) (FERACF). 9. Bodenhamer, D. J., Corrigan, J. and Harris, T. M. (2015). Deep Maps and Spatial Narratives (Indiana University Press). 10. Gillings, M., Hacigüzeller, P. and Lock, G. R. (2019). Re-mapping archaeology: critical perspectives, alternative mappings (Routledge).

| The Density of Types and the Dignity of the Fragment. A Website Approach to Archaeological Typology. | Giorgio Buccellati and Marilyn Kelly-Buccellati | <p>Typology hinges on categorization, and the two main axes of categorization are the roster and the lexicon: the first defines elements from an -emic, and the second from an (e)-tic point of view, i. e., as a closed or an open system, respectivel... |  | Antiquity, Theoretical archaeology | Shumon Tobias Hussain | 2023-03-17 09:11:46 | View | |

05 Jul 2023

Tool types and the establishment of the Late Palaeolithic (Later Stone Age) cultural taxonomic system in the Nile Valley
Alice Leplongeon https://doi.org/10.5281/zenodo.8115202
Cultural taxonomic systems and the Late Palaeolithic/Later Stone Age prehistory of the Nile Valley – a critical review
Recommended by Felix Riede, Sébastien Plutniak and Shumon Tobias Hussain based on reviews by Giuseppina Mutri and 1 anonymous reviewer

The paper entitled “Tool types and the establishment of the Late Palaeolithic (Later Stone Age) cultural taxonomic system in the Nile Valley” submitted by A. Leplongeon offers a review of the many cultural taxonomic units in use for the prehistory – especially the Late Palaeolithic/Later Stone Age – of the Nile Valley (Leplongeon 2023). This paper was first developed for a special conference session convened at the EAA annual meeting in 2021 and is intended for an edited volume on the topic of typology and taxonomy in archaeology. Issues of cultural taxonomy have recently risen to the forefront of archaeological debate (Reynolds and Riede 2019; Ivanovaitė et al. 2020; Lyman 2021). Archaeological systematics, most notably typology, have roots in the research history of a particular region and period (e.g. Plutniak 2022); commonly, different scholars employ different and at times incommensurable systems, often leading to a lack of clarity and inter-regional interoperability. African prehistory is not exempt from this debate (e.g. Wilkins 2020) and, in fact, such a situation is perhaps nowhere more apparent than in the iconic Nile Valley. The Nile Valley is marked by a complex colonial history and long-standing archaeological interest from a range of national and international actors. It is also a vital corridor for understanding human dispersals out of and into Africa, and along the North African coastal zone. As Leplongeon usefully reviews, early researchers have, as elsewhere, proposed a variety of archaeological cultures, the legacies of which still weigh on contemporary discussions. In the Nile Valley, these are the Kubbaniyan (23.5-19.3 ka cal. BP), the Halfan (24-19 ka cal. BP), the Qadan (20.2-12 ka cal. BP), the Afian (16.8-14 ka cal. BP) and the Isnan (16.6-13.2 ka cal. BP), but their temporal and spatial signatures remain in part poorly constrained, or their epistemic status debated. 
Leplongeon’s critical and timely chronicle of this debate highlights in particular the vital contributions of the many female prehistorians who have worked in the region – Angela Close (e.g. 1977; 1978) and Maxine Kleindienst (e.g. 2006) to name just a few of the more recent ones – and whose earlier work had already addressed, if not even solved, many of the pressing cultural taxonomic issues that beleaguer the Late Palaeolithic/Later Stone Age record of this region. Leplongeon and colleagues (Leplongeon et al. 2020; Mesfin et al. 2020) have themselves contributed substantially to new cultural taxonomic research in the wider region, showing how novel quantitative methods coupled with research-historical acumen can flag up and overcome the shortcomings of previous systematics. Yet, as Leplongeon also notes, the cultural taxonomic framework for the Nile Valley specifically has proven rather robust and does seem to serve its purpose as a broad chronological shorthand well. By the same token, she urges due caution when it comes to interpreting these lithic-based taxonomic units in terms of past social groups. Cultural systematics are essential for such interpretations, but age-old frameworks are often not fit for this purpose. New work by Leplongeon is likely not only to continue the long tradition of female prehistorians working in the Nile Valley but also to provide an epistemologically and empirically more robust platform for understanding the social and ecological dynamics of Late Palaeolithic/Later Stone Age communities there.

Bibliography Close, Angela E. 1977. The Identification of Style in Lithic Artefacts from North East Africa. Mémoires de l’Institut d’Égypte 61. Cairo: Geological Survey of Egypt. Close, Angela E. 1978. “The Identification of Style in Lithic Artefacts.” World Archaeology 10 (2): 223–37. https://doi.org/10.1080/00438243.1978.9979732 Ivanovaitė, Livija, Serwatka, Kamil, Hoggard, Christian Steven, Sauer, Florian and Riede, Felix. 2020. “All These Fantastic Cultures? Research History and Regionalization in the Late Palaeolithic Tanged Point Cultures of Eastern Europe.” European Journal of Archaeology 23 (2): 162–85. https://doi.org/10.1017/eaa.2019.59 Kleindienst, M. R. 2006. “On Naming Things: Behavioral Changes in the Later Middle to Earlier Late Pleistocene, Viewed from the Eastern Sahara.” In Transitions Before the Transition. Evolution and Stability in the Middle Paleolithic and Middle Stone Age, edited by E. Hovers and Steven L. Kuhn, 13–28. New York, NY: Springer. Leplongeon, Alice. 2023. “Tool Types and the Establishment of the Late Palaeolithic (Later Stone Age) Cultural Taxonomic System in the Nile Valley.” https://doi.org/10.5281/zenodo.8115202 Leplongeon, Alice, Ménard, Clément, Bonhomme, Vincent and Bortolini, Eugenio. 2020. “Backed Pieces and Their Variability in the Later Stone Age of the Horn of Africa.” African Archaeological Review 37 (3): 437–68. https://doi.org/10.1007/s10437-020-09401-x Lyman, R. Lee. 2021. “On the Importance of Systematics to Archaeological Research: The Covariation of Typological Diversity and Morphological Disparity.” Journal of Paleolithic Archaeology 4 (1): 3. https://doi.org/10.1007/s41982-021-00077-6 Mesfin, Isis, Leplongeon, Alice, Pleurdeau, David, and Borel, Antony. 2020. “Using Morphometrics to Reappraise Old Collections: The Study Case of the Congo Basin Middle Stone Age Bifacial Industry.” Journal of Lithic Studies 7 (1): 1–38. https://doi.org/10.2218/jls.4329 Plutniak, Sébastien. 2022. “What Makes the Identity of a Scientific Method? A History of the ‘Structural and Analytical Typology’ in the Growth of Evolutionary and Digital Archaeology in Southwestern Europe (1950s–2000s).” Journal of Paleolithic Archaeology 5 (1): 10. https://doi.org/10.1007/s41982-022-00119-7 Reynolds, Natasha, and Riede, Felix. 2019. “House of Cards: Cultural Taxonomy and the Study of the European Upper Palaeolithic.” Antiquity 93 (371): 1350–58. https://doi.org/10.15184/aqy.2019.49 Wilkins, Jayne. 2020. “Is It Time to Retire NASTIES in Southern Africa? Moving Beyond the Culture-Historical Framework for Middle Stone Age Lithic Assemblage Variability.” Lithic Technology 45 (4): 295–307. https://doi.org/10.1080/01977261.2020.1802848

| Tool types and the establishment of the Late Palaeolithic (Later Stone Age) cultural taxonomic system in the Nile Valley | Alice Leplongeon | <p>Research on the prehistory of the Nile Valley has a long history dating back to the late 19th century. But it is only between the 1960s and 1980s, that numerous cultural entities were defined based on tool and core typologies; this habit stoppe... |  | Africa, Lithic technology, Upper Palaeolithic | Felix Riede | 2023-03-08 19:25:28 | View | |

05 Jun 2023

SEAHORS: Spatial Exploration of ArcHaeological Objects in R Shiny
ROYER, Aurélien, DISCAMPS, Emmanuel, PLUTNIAK, Sébastien, THOMAS, Marc https://doi.org/10.5281/zenodo.7957154
Analyzing piece-plotted artifacts just got simpler: A good solution to the wrong problem?
Recommended by Reuven Yeshurun based on reviews by Frédéric Santos, Jacqueline Meier and Maayan Lev

Paleolithic archaeologists habitually measure 3-coordinate data for artifacts in their excavations. This was first done manually, and in the last three decades it is usually performed by a total station and associated hardware. While the field recording procedure is quite straightforward, visualizing and analyzing the data are not, often requiring specialized proprietary software or coding expertise. Here, Royer and colleagues (2023) present the SEAHORS application, an elegant solution for the post-excavation analysis of artifact coordinate data that seems to be instantly useful for numerous archaeologists. SEAHORS allows one to import and organize field data (Cartesian coordinates and point description), which often comes in a variety of formats, and to create various density and distribution plots. It is specifically adapted to the needs of archaeologists, is free and accessible, and much simpler to use than many commercial programs. The authors further demonstrate the use of the application in the post-excavation analysis of the Cassenade Paleolithic site (see also Discamps et al., 2019). While in no way detracting from my appreciation of Royer et al.’s (2023) work, I would like to play the devil’s advocate by asking whether, in the majority of cases, field recording of artifacts in three coordinates is warranted. Royer et al. (2023) regard piece plotting as “…indispensable to propose reliable spatial planimetrical and stratigraphical interpretations” but this assertion does not hold in all (or most) cases, where careful stratigraphic excavation employing thin volumetric units would do just as well. 
Moreover, piece-plotting has some serious drawbacks. The recording often slows excavations considerably, beyond what is needed for carefully exposing and documenting the artifacts in their contexts, resulting in smaller horizontal and vertical exposures (e.g., Gilead, 2002). This typically hinders a fuller stratigraphic and contextual understanding of the excavated levels and features. Even worse, the method almost always creates a biased sample of “coordinated artifacts”, in which the most important items for understanding spatial patterns and site-formation processes – the small ones – are underrepresented. Some projects run the danger of treating the coordinated artifacts as bearing more significance than the sieve-recovered items, preferentially studying the former with no real justification. Finally, the coordinated items often go unassigned to a volumetric unit, effectively disconnecting them from other types of data found in the same depositional contexts. The advantages of piece-plotting may, in some cases, offset the disadvantages. But what I find missing in the general discourse (certainly not in the recommended preprint) is the “theory” behind the seemingly technical act of 3-coordinate recording (Yeshurun, 2022). Being in effect a form of sampling, this practice needs a rethink about where and how it should be applied, what depositional contexts justify it, and what the goals are. These questions should determine whether all “visible” artifacts are plotted, or just an explicitly defined sample of them (e.g., elongated items above a certain length threshold, which should be more reliable for fabric analysis), or whether the circumstances do not actually justify it. In the latter case, researchers sometimes opt for using “virtual coordinates” within each spatial unit (typically 0.5x0.5 m), essentially replicating the data that is generated by “real” coordinates and integrating the sieve-recovered items as well. 
In either case, Royer et al.’s (2023) solution for plotting and visualizing labeled points within intra-site space would indeed be an important addition to the archaeologists’ tool kits.

References cited Discamps, E., Bachellerie, F., Baillet, M. and Sitzia, L. (2019). The use of spatial taphonomy for interpreting Pleistocene palimpsests: an interdisciplinary approach to the Châtelperronian and carnivore occupations at Cassenade (Dordogne, France). PaleoAnthropology 2019, 362–388. https://doi.org/10.4207/PA.2019.ART136 Gilead, I. (2002). Too many notes? Virtual recording of artifacts provenance. In: Niccolucci, F. (Ed.). Virtual Archaeology: Proceedings of the VAST Euroconference, Arezzo 24–25 November 2000. BAR International Series 1075, Archaeopress, Oxford, pp. 41–44. Royer, A., Discamps, E., Plutniak, S. and Thomas, M. (2023). SEAHORS: Spatial Exploration of ArcHaeological Objects in R Shiny. Zenodo, 7957154, ver. 2 peer-reviewed and recommended by Peer Community in Archaeology. https://doi.org/10.5281/zenodo.7929462 Yeshurun, R. (2022). Intra-site analysis of repeatedly occupied camps: Sacrificing “resolution” to get the story. In: Clark, A.E., Gingerich, J.A.M. (Eds.). Intrasite Spatial Analysis of Mobile and Semisedentary Peoples: Analytical Approaches to Reconstructing Occupation History. University of Utah Press, pp. 27–35.

| SEAHORS: Spatial Exploration of ArcHaeological Objects in R Shiny | ROYER, Aurélien, DISCAMPS, Emmanuel, PLUTNIAK, Sébastien, THOMAS, Marc | <p style="text-align: justify;">This paper presents SEAHORS, an R shiny application available as an R package, dedicated to the intra-site spatial analysis of piece-plotted archaeological remains. This open-source script generates 2D and 3D scatte... |  | Computational archaeology, Spatial analysis, Theoretical archaeology | Reuven Yeshurun | 2023-02-24 16:01:44 | View | |

28 Aug 2023

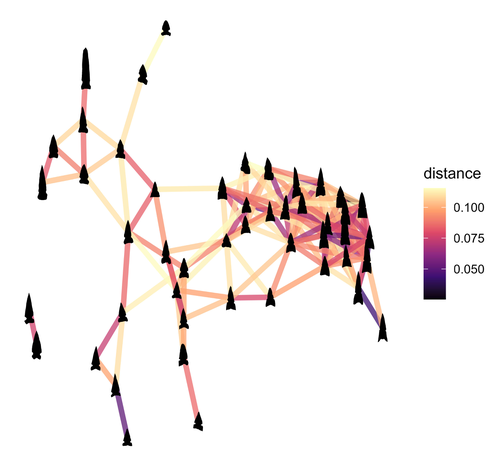

Geometric Morphometric Analysis of Projectile Points from the Southwest United States
Robert J. Bischoff https://doi.org/10.31235/osf.io/a6wjc
2D Geometric Morphometrics of Projectile Points from the Southwestern United States
Recommended by Adrian L. Burke based on reviews by James Conolly and 1 anonymous reviewer

Bischoff (2023) is a significant contribution to the growing field of geometric morphometrics in stone tool analysis. The subject is projectile points from the southwestern United States. Projectile point typologies or systematics remain an important part of North American archaeology, and in fact these typologies continue to be used primarily as cultural-historical markers. This article looks at projectile point types using a 2D image geometric morphometric analysis as a way of both improving on projectile point types and testing whether these types are in fact based in measurable reality. A total of 164 point outlines are analyzed using elliptical Fourier, semilandmark and landmark analyses. The author also uses a network analysis to look at possible relationships between projectile point morphologies in space. This is a clever way of working around the predefined distributions of projectile point types, some of which are over 100 years old. Because stone tools are dynamic in terms of their use, reworking and reuse, this article can also provide solutions for studying that dynamism. This article therefore also has a wide applicability to other stone tool analyses.

Reference Bischoff, R. J. (2023) Geometric Morphometric Analysis of Projectile Points from the Southwest United States, SocArXiv, a6wjc, ver. 8 peer-reviewed and recommended by Peer Community in Archaeology. https://doi.org/10.31235/osf.io/a6wjc

| Geometric Morphometric Analysis of Projectile Points from the Southwest United States | Robert J. Bischoff | <p style="text-align: justify;">Traditional analyses of projectile points often use visual identification, the presence or absence of discrete characteristics, or linear measurements and angles to classify points into distinct types. Geometric mor... |  | Archaeometry, Computational archaeology, Lithic technology, North America | Adrian L. Burke | 2022-12-18 03:38:14 | View | |

26 Mar 2024

What is a form? On the classification of archaeological pottery.
Philippe Boissinot https://doi.org/10.5281/zenodo.10718433
Abstract and Concrete – Querying the Metaphysics and Geometry of Pottery Classification
Recommended by Shumon Tobias Hussain, Felix Riede and Sébastien Plutniak based on reviews by 2 anonymous reviewers

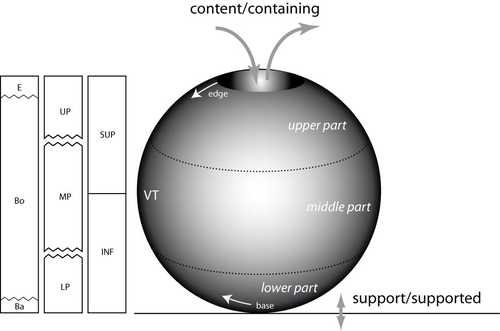

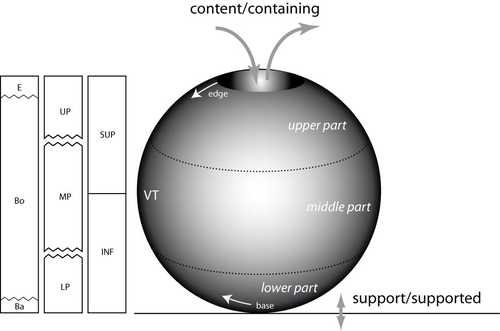

“What is a form? On the classification of archaeological pottery” by P. Boissinot (1) is a timely contribution to broader theoretical reflections on classification and ordering practices in archaeology, including type-construction and justification. Boissinot rightly reminds us that engagement with the type concept always touches upon the uneasy relationship between the abstract and the concrete, alternatively cast as the ongoing struggle in knowledge production between idealization and particularization. Types are always abstract and as such both ‘more’ and ‘less’ than the concrete objects they refer to. They are ‘more’ because they establish a higher-order identity of variously heterogeneous, concrete objects and they are ‘less’ because they necessarily reduce the richness of the concrete and often erase it altogether. The confusion that types evoke in archaeology and elsewhere has therefore a lot to do with the fact that types are simply not spatiotemporally distinct particulars. As abstract entities, types so almost automatically re-introduce the question of universalism but they do not decide this question, and Boissinot also tentatively rejects such ambitions. In fact, with Boissinot (1) it may be said that universality is often precisely confused with idealization, which is indispensable to all archaeological ordering practices. Idealization, increasingly recognized as an important epistemic operation in science (2, 3), paradoxically revolves around the deliberate misrepresentation of the empirical systems being studied, with models being the paradigm cases (4). Models can go so far as to assume something strictly false about the phenomena under consideration in order to advance their epistemic goals. In the words of Angela Potochnik (5), ‘the role of idealization in securing understanding distances understanding from truth but […] this understanding nonetheless gives rise to scientific knowledge’. 
The affinity especially to models may in part explain why types are so controversial and are often outright rejected as ‘real’ or ‘useful’ by those who only recognize the existence of concrete particulars (nominalism). As confederates of the abstract, types thus join the ranks of mathematics and geometry, which the author identifies as prototypical abstract systems. Definitions are also abstract. According to Boissinot (1), they delineate a ‘position of limits’, and the precision and rigorousness they bring comes at the cost of subjectivity. This unites definitions and types, as both can be precise and clear-cut but they can never be strictly singular or without alternative – in order to do so, they must rely on yet another higher-order system of external standards, and so ad infinitum. Boissinot (1) advocates a mathematical and thus by definition abstract approach to archaeological type-thinking in the realm of pottery, as the abstractness of this approach affords relatively rigorous description based on the rules of geometry. Importantly, this choice is not a mysterious a priori rooted in questionable ideas about the supposed superiority of such an approach but rather is the consequence of a careful theoretical exploration of the particularities (domain-specificities) of pottery as a category of human practice and materiality. The abstract thus meets the concrete again: objects of pottery, in sharp contrast to stone artefacts for example, are the product of additive processes. These processes, moreover, depend on the ‘fusion’ of plastic materials and the subsequent fixation of the resulting configuration through firing (processes which, strictly speaking, remove material, such as stretching, appear to be secondary vis-à-vis global shape properties). Because of this overriding ‘fusion’ of pottery, the identification of parts, functional or otherwise, is always problematic and indeterminate to some extent. 
As products of fusion, parts and wholes represent an integrated unity, and this distinguishes pottery from other technologies, especially machines. The consequence is that the presence or absence of parts and their measurements may not be a privileged locus of type-construction as they are in some biological contexts for example. The identity of pottery objects is then generally bound to their fusion. As a ‘plastic montage’ rather than an assembly of parts, individual parts cannot simply be replaced without threatening the identity of the whole. Although pottery can and must sometimes be repaired, this renders its objects broadly morpho-static (‘restricted plasticity’) rather than morpho-dynamic, which is a condition proper to other material objects such as lithic (use and reworking) and metal artefacts (deformation) but plays out in different ways there. This has a number of important implications, namely that general shape and form properties may be expected to hold much more relevant information than in technological contexts characterized by basal modularity or morpho-dynamics. It is no coincidence that ‘fusion’ is also emphasized by Stephen C. Pepper (6) as a key category of what he calls contextualism. Fusion for Pepper pays dividends to the interpenetration of different parts and relations, and points to a quality of wholes which cannot be reduced any further and integrates the details into a ‘more’. Pepper maintains that ‘fusion, in other words, is an agency of simplification and organization’ – it is the ‘ultimate cosmic determinator of a unit’ (p. 243-244, emphasis added). This provides metaphysical reasons to look at pottery from a whole-centric perspective and to foreground the agency of its materiality. This is precisely what Boissinot (1) does when he, inspired by the great techno-anthropologist François Sigaut (7), gestures towards the fact that elementarily a pot is ‘useful for containing’. 
He thereby draws attention not to the function of pottery objects but to what pottery objects, as material objects, do by means of their material agency: they disclose a purposive tension between content and container, the carrier and carried as well as inclusion and exclusion, which can also be understood as material ‘forces’ exerted upon whatever is to be contained. This, and not an emic reading of past pottery use, leads to basic qualitative distinctions between open and closed vessels following Anna O. Shepard’s (8) three basic pottery categories: unrestricted, restricted, and necked openings. These distinctions are not merely intuitive but attest to the object-specificity of pottery as fused matter. This fused dimension of pottery also leads to a recognition that shapes have geometric properties that emerge from the forced fusion of the plastic material worked, and Boissinot (1) suggests that curvature is the most prominent of such features, which can therefore be used to describe ‘pure’ pottery forms and compare abstract within-pottery differences. A careful mathematical theorization of curvature in the context of pottery technology, following George D. Birkhoff (9), in this way allows one to formally distinguish four types of ‘geometric curves’ whose configuration may serve as a basis for archaeological object grouping. The idealization involved in this proposal is not accidental but deliberately instrumental – it reminds us that type-thinking in archaeology cannot escape the abstract. It is notable here that the author does not suggest simply subjecting total pottery form to some sort of geometric-morphometric analysis but develops a proposal that foregrounds a limited range of whole-based geometric properties (in contrast to part-based) anchored in general considerations as to the material specificity of pottery as a quasi-species of objects. 
As Boissinot (1) himself notes, this amounts to a ‘naturalization’ of archaeological artefacts and offers something of an alternative (a third way) to the old discussion between disinterested form analysis and functional (and thus often theory-dependent) artefact groupings. He thereby effectively rejects both of these classic positions, because the first ignores the particularities of pottery and the second relies on the real function of artefacts, which is in most cases archaeologically inaccessible. In this way, some clear distance is established from both ethnoarchaeology and thing studies as a project. Attending to the ‘discipline of things’ proposal by Bjørnar Olsen and others (10, 11), and drawing on his earlier work (12), Boissinot interestingly notes that archaeology – never dealing with ‘complete societies’ – could only be ‘deficient’. This has mainly to do with the underdetermination of object function by the archaeological record (and the confusion between function and functionality) as outlined by the author. It seems crucial in this context that Boissinot does not simply query ‘What is a thing?’ as other thing-theorists have previously done, but emphatically turns this question into ‘In what way is it not the same as something else?’. Here he of course comes close to Olsen’s In Defense of Things insofar as the ‘mode of being’ or the ‘ontology’ of things is centred. What appears different, however, is the emphasis on plurality and within-thing heterogeneity on the level of abstract wholes. With Boissinot, we always have to speak of ontologies and modes of being in the plural, and those are linked to different kinds of things and their material specificities. Theorizing and idealizing these specificities are considered central tasks and goals of archaeological classification and typology. 
As such, this position provides an interesting alternative to computational big-data (the-more-the-better) approaches to form and to functionally grounded type-thinking, yet it clearly takes sides in the debate between empirical and theoretical type-construction, since essential object-specific properties in the sense of Boissinot (1) cannot be deduced in a purely data-driven fashion. Boissinot’s proposal to re-think archaeological types from the perspective of different species of archaeological objects and their abstract material specificities is thought-provoking, and we cannot help wondering what fruits such interrogations would bear in relation to other kinds of objects such as lithics, metal artefacts, glass, and so forth. In addition, such meta-groupings are inherently problematic themselves, and they thus re-introduce old challenges as to how to separate the relevant super-wholes, technological genesis being an often-invoked candidate discriminator. The latter may suggest that we cannot but ultimately circle back to the human context of archaeological objects, even if we, for both theoretical and epistemological reasons, wish to embark on strictly object-oriented archaeologies in order to emancipate ourselves from the ‘contamination’ of language and in-built assumptions.

Bibliography
1. Boissinot, P. (2024). What is a form? On the classification of archaeological pottery, Zenodo, 7429330, ver. 4 peer-reviewed and recommended by Peer Community in Archaeology. https://doi.org/10.5281/zenodo.10718433
2. Fletcher, S. C., Palacios, P., Ruetsche, L., Shech, E. (2019). Infinite idealizations in science: an introduction. Synthese 196, 1657–1669. https://doi.org/10.1007/s11229-018-02069-6
3. Potochnik, A. (2017). Idealization and the aims of science (University of Chicago Press). https://doi.org/10.7208/chicago/9780226507194.001.0001
4. Winkelmann, J. (2023). On Idealizations and Models in Science Education. Sci & Educ 32, 277–295. https://doi.org/10.1007/s11191-021-00291-2
5. Potochnik, A. (2020). Idealization and Many Aims. Philosophy of Science 87, 933–943. https://doi.org/10.1086/710622
6. Pepper, S. C. (1972). World hypotheses: a study in evidence, 7. print (University of California Press).
7. Sigaut, F. (1991). “Un couteau ne sert pas à couper, mais en coupant. Structure, fonctionnement et fonction dans l’analyse des objets” in 25 Ans d’études Technologiques En Préhistoire. Bilan et Perspectives (Association pour la promotion et la diffusion des connaissances archéologiques), pp. 21–34.
8. Shepard, A. O. (1956). Ceramics for the Archeologist (Carnegie Institution of Washington n° 609).
9. Birkhoff, G. D. (1933). Aesthetic Measure (Harvard University Press).
10. Olsen, B. (2010). In defense of things: archaeology and the ontology of objects (AltaMira Press). https://doi.org/10.1093/jdh/ept014
11. Olsen, B., Shanks, M., Webmoor, T., Witmore, C. (2012). Archaeology: the discipline of things (University of California Press). https://doi.org/10.1525/9780520954007
12. Boissinot, P. (2011). “Comment sommes-nous déficients ?” in L’archéologie Comme Discipline ? (Le Seuil), pp. 265–308. | What is a form? On the classification of archaeological pottery. 
| Philippe Boissinot | The main question we want to ask here concerns the application of philosophical considerations on identity about artifacts of a particular kind (pottery). The purpose is the recognition of types and their classification, which are two of the ma... |  | Ceramics, Theoretical archaeology | Shumon Tobias Hussain | 2022-12-13 15:04:45 | View |

MANAGING BOARD

Alain Queffelec

Marta Arzarello

Ruth Blasco

Otis Crandell

Luc Doyon

Sian Halcrow

Emma Karoune

Aitor Ruiz-Redondo

Philip Van Peer